Unveiling The Truth: Undress Bot's Risks & Safety Guide

Could artificial intelligence be used to create explicit images without consent? The emergence and rapid proliferation of "undress bots" raise deeply troubling questions about privacy, ethics, and the potential for widespread misuse of technology. These tools, powered by sophisticated algorithms, are designed to generate nude or semi-nude depictions of individuals based on existing photographs.

The digital landscape, already complex and often unforgiving, is now confronted with a new breed of threat. While the technology itself might seem innocuous in its abstract form (a series of code instructions capable of image manipulation), its real-world application presents a significant danger. The speed with which these bots are evolving, coupled with their accessibility and the anonymity afforded by the internet, creates an environment ripe for abuse. Victims of these AI-driven image manipulations face not only the distress of having their likeness weaponized, but also the daunting task of getting the images removed and seeking redress. Legal frameworks struggle to keep pace with technological advancements, leaving individuals vulnerable to significant harm. The very fabric of consent, particularly in the context of image creation and distribution, is being fundamentally challenged. The potential for reputational damage, emotional trauma, and even physical threats arising from this technology underscores the urgent need for both technical and societal solutions.

| Aspect | Details |

|---|---|

| Core Functionality | Algorithms that remove clothing from images, often based on machine learning trained on vast datasets of nude imagery. Some bots also generate entirely new images of people in varying states of undress. |

| Operating Principle | Uses Generative Adversarial Networks (GANs) and other AI techniques to predict and fill in missing image data, essentially "hallucinating" the details of the body beneath clothing. |

| Key Concerns |

|

| Technical Capabilities | Can process images and videos, and some are capable of generating convincing, but fake, images. Many operate with relatively low computational requirements, and are therefore widely accessible. |

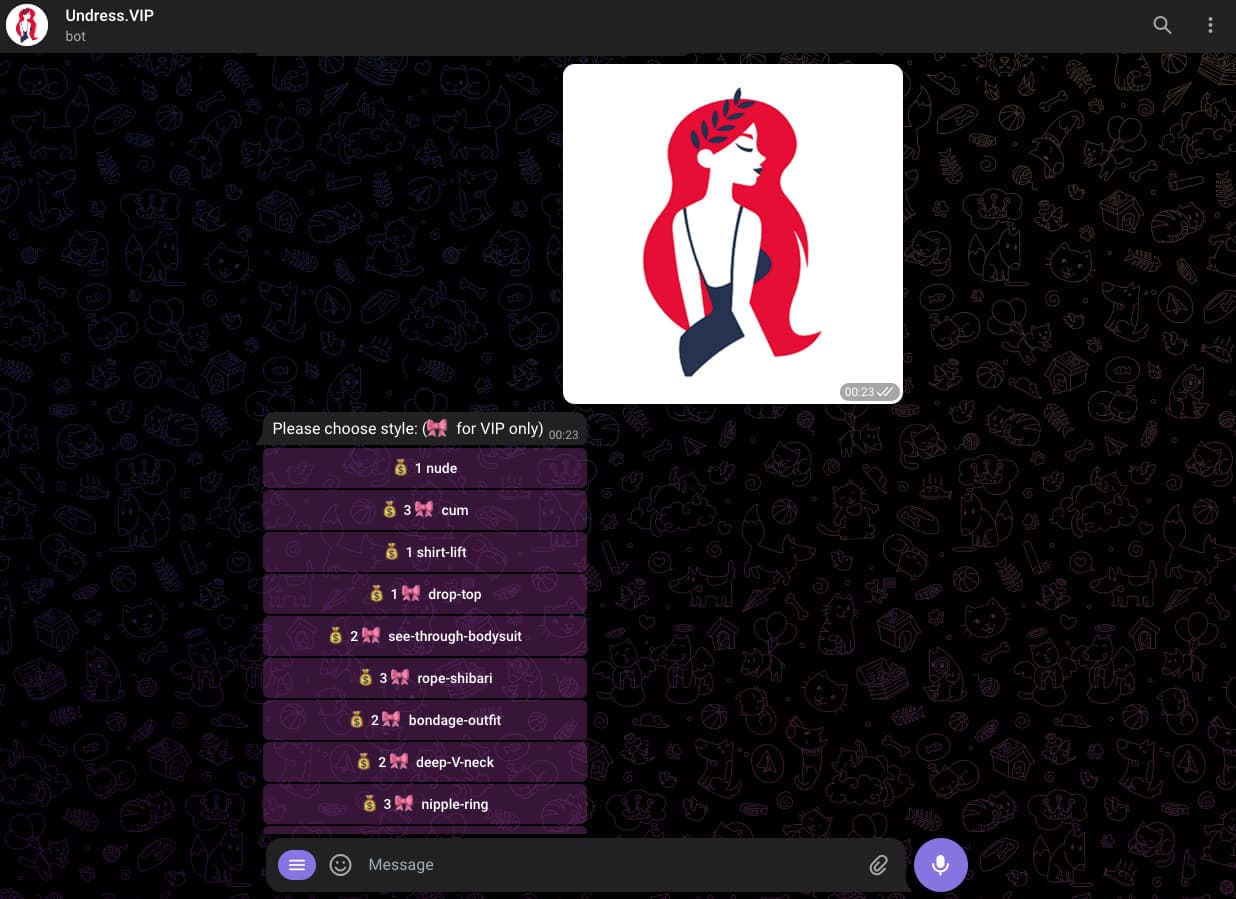

| Current Availability | Varies. Some services are offered as applications, websites, or even telegram bots, whereas others operate in more discreet environments. The accessibility changes frequently as sites are taken down and new services appear. |

| Victimology | Anyone can be a potential victim. The risk is increased when identifiable images are posted online, or when a person has a public online profile. |

| Potential for Misuse |

|

| Ethical Considerations |

|

| Legal Framework | The legal landscape is fragmented and evolving. Laws related to revenge porn, image manipulation, and copyright infringement may apply, but enforcement is challenging. New legislation is needed to address the specifics of AI-generated content and its misuse. |

| Countermeasures |

|

| Future Developments | Continued refinement of algorithms, making the images more realistic and harder to detect. The possibility that these tools may be applied to real-time video streams. The potential for this technology to be integrated into a variety of applications and devices. |

| Further Reading | Electronic Frontier Foundation |

The ease with which these "undress bots" operate is alarming. Many require little technical expertise to use. A user typically uploads a photograph, and the bot, using its trained algorithms, attempts to remove the clothing and create a nude or semi-nude image. The output can vary in quality, depending on the complexity of the image, the training data of the bot, and the specific algorithm used. The sophistication, however, is rapidly increasing. Early versions often produced distorted or unrealistic results, whereas current iterations are capable of generating images that are difficult to distinguish from genuine photographs.

The issue of consent is at the heart of the problem. These images are often created and distributed without the knowledge or permission of the person depicted. This non-consensual image manipulation violates fundamental rights to privacy and bodily autonomy. When the image is shared online, the damage is magnified. The images can spread virally, causing widespread embarrassment, shame, and emotional distress. The impacts can extend beyond the emotional realm. Careers can be damaged. Relationships can be destroyed. Individuals can be subjected to harassment, stalking, and even threats of violence. The use of "undress bots" represents a new form of digital violence with potentially devastating real-world consequences.

The anonymity afforded by the internet exacerbates the challenges of addressing this technology. Those who develop and distribute the bots often operate from the shadows, making it difficult to trace their identities and hold them accountable. The platforms that host these services may be located in jurisdictions with lax regulations or limited resources for law enforcement. The very nature of online sharing, which facilitates rapid dissemination, makes the problem hard to contain. Takedown requests, while sometimes effective, are often reactive measures that can't stop the initial spread. The victims face an uphill battle against a system designed to allow the content to proliferate with minimal accountability.

Another area of concern is the range of malicious purposes that "undress bots" can serve. The technology can be employed to create deepfakes (fabricated images or videos) of individuals, potentially to damage reputations or spread false information. These generated images can be used for extortion, blackmail, or other forms of cybercrime. The tools can be used in political disinformation campaigns, where the fabricated images are designed to smear public figures. They can also supply the means to create and distribute revenge pornography. The potential for misuse is vast and growing, and it is critical that legislation is designed to prevent it.

The development of undress bots presents significant challenges to existing legal frameworks. The laws around image manipulation, privacy, and revenge pornography are not always clearly defined. Enforcement can be difficult, especially when the perpetrators are operating anonymously or from other jurisdictions. It is difficult to determine when a generated image constitutes defamation or is illegal. There is a need for updated legislation that specifically addresses the dangers of AI-generated content and provides clear avenues for legal recourse for victims. This includes laws that criminalize the creation and distribution of non-consensual AI-generated images, with penalties that reflect the seriousness of the harms involved.

Beyond legal and technical responses, a societal shift is needed to address the underlying issues. Education is critical. People need to be informed about the risks associated with these tools and how to protect themselves from being targeted. They also need greater awareness of the consequences of online sharing and the importance of consent. Discussions about consent must extend into the digital space. Open conversations about how technology impacts personal safety, with the involvement of educators, parents, and community leaders, are important. The aim is to promote a culture of respect and responsibility in the online environment.

Technological solutions, too, have a role to play. Researchers and developers are working on ways to detect and flag AI-generated images. This is a rapidly evolving field, with new tools and techniques emerging regularly. Watermarking technology, which embeds identifying information into images, can help track the source of the content and make it easier to identify and remove manipulated images. Cybersecurity measures, such as multi-factor authentication, can also help to protect personal data and prevent images from being compromised. The technological community must continue to develop and implement these kinds of tools to stay ahead of the curve.
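To make the watermarking idea above concrete, here is a minimal sketch of hiding an identifying tag in the least significant bits of pixel values and reading it back. It is an illustration only, not a description of any particular product: the function names, the `src-01` tag, and the synthetic pixel list are all hypothetical, and the image is assumed to be a flat list of 8-bit grayscale values. Least-significant-bit marks are fragile (recompression or resizing typically destroys them), so real provenance and watermarking schemes would need to be considerably more robust.

```python
from typing import List


def embed_watermark(pixels: List[int], tag: bytes) -> List[int]:
    """Return a copy of `pixels` with `tag` hidden in the least significant bits."""
    # Expand the tag into individual bits, most significant bit first.
    bits = [(byte >> shift) & 1 for byte in tag for shift in range(7, -1, -1)]
    if len(bits) > len(pixels):
        raise ValueError("image too small for this tag")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit of the pixel, then set it to the tag bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract_watermark(pixels: List[int], tag_length: int) -> bytes:
    """Read `tag_length` bytes back out of the least significant bits."""
    out = bytearray()
    for byte_index in range(tag_length):
        value = 0
        for bit_index in range(8):
            value = (value << 1) | (pixels[byte_index * 8 + bit_index] & 1)
        out.append(value)
    return bytes(out)


# Hypothetical 8-bit grayscale values standing in for a real image.
image = [(i * 37) % 256 for i in range(64)]
tag = b"src-01"  # hypothetical source identifier

marked = embed_watermark(image, tag)
recovered = extract_watermark(marked, len(tag))

# Each pixel changes by at most one intensity level, so the mark is invisible.
max_change = max(abs(a - b) for a, b in zip(image, marked))
```

In this sketch the extractor has to know the tag length in advance. A practical scheme would carry its own length or a fixed-size header, and would usually pair the mark with a signature so it cannot be forged.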

The role of tech platforms is critical. Social media companies, websites, and other online platforms need to develop and enforce clear policies regarding the creation and distribution of AI-generated nude content. This includes proactive measures to detect and remove such content. They also must establish clear reporting mechanisms so that victims of this kind of abuse can report images and seek redress. Companies must ensure that their platforms are not being used to facilitate the non-consensual sharing of images and must work to quickly remove any such content when it is reported.

Addressing the problems posed by undress bots requires a multi-faceted approach. There is a need for coordinated action from lawmakers, law enforcement, the technology industry, and individuals. Governments must create and enforce the laws that protect privacy and prevent the misuse of AI-generated images. Tech companies must take responsibility for the content shared on their platforms. People must be vigilant about protecting their personal information and practicing safe online behavior. There is no single solution to this complex problem. It requires a shared commitment to protect individual rights and to foster a culture of respect and consent in the digital age. The long-term effort requires an ongoing dialogue to ensure a future where technology is used responsibly and ethical lines are not crossed.

The rise of "undress bots" signals a critical moment in the evolution of technology and the digital world. As AI continues to advance, it is essential to address the ethical, legal, and social implications of these developments. Failure to do so could lead to the creation of a toxic online environment. It is a reminder that technology, in the absence of safeguards and social responsibility, can be a tool for harm. By working together, however, it is possible to mitigate these risks and build a more secure and equitable digital future.